How Prompt Adaptation works

Prompt adaptation is a data-driven prompt optimization technique that leverages evolutionary algorithms and reinforcement learning to search for the optimal prompt for a given use case, model, and evaluation metric.
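One way to state this search is as a per-model optimization problem. The formulation below is our own illustrative assumption, not a documented Not Diamond definition. D is an evaluation dataset of n input/reference pairs (x_i, y_i). M_p(x) is the output of target model M when prompt p is applied to input x. m is the evaluation metric, scoring one output against its reference. P is the space of candidate prompts the search explores.

```latex
p^{*}_{M} \;=\; \arg\max_{p \in \mathcal{P}} \; \frac{1}{n} \sum_{i=1}^{n} m\big(M_{p}(x_i),\, y_i\big)
```

Because the maximizer depends on M, the same original prompt can yield a different optimized prompt for each target model.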

The adaptation process

Prompt adaptation takes your existing prompt, an evaluation dataset, and an evaluation metric, and automatically generates optimized prompts for one or more target models. The process involves four steps:

- Baseline evaluation: We evaluate your original prompt on the origin model (if specified) and on each target model, using your evaluation dataset and metric.
- Automated optimization: Our optimization algorithm generates and tests variations of your prompt, each tuned to a target model's characteristics and instruction-following patterns.
- Performance validation: Each adapted prompt is validated against your evaluation metric to ensure it maintains or improves performance on the target model.
- Results comparison: You receive an adapted prompt for each target model, along with performance metrics on the test set, so you can compare pre- and post-optimization results.
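The baseline and comparison steps can be sketched as follows. This is a minimal illustration, not Not Diamond's implementation or API. The names `exact_match`, `evaluate_prompt`, `compare`, and `toy_model` are hypothetical. Each "model" is a plain Python function standing in for an LLM call. The adapted prompt is written by hand, because the optimization step itself is not shown here.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical types: a "model" maps a rendered prompt to an output string,
# and a metric scores one output against its reference answer.
Model = Callable[[str], str]
Metric = Callable[[str, str], float]
Dataset = List[Tuple[str, str]]  # (input, reference) pairs


def exact_match(output: str, reference: str) -> float:
    """Hypothetical metric: 1.0 if output equals reference after trimming whitespace."""
    return 1.0 if output.strip() == reference.strip() else 0.0


def evaluate_prompt(template: str, model: Model, dataset: Dataset, metric: Metric) -> float:
    """Average metric score of a prompt template on one model over a dataset."""
    scores = [metric(model(template.format(input=x)), y) for x, y in dataset]
    return sum(scores) / len(scores)


def compare(original: str, adapted: Dict[str, str], models: Dict[str, Model],
            test_set: Dataset, metric: Metric) -> Dict[str, Tuple[float, float]]:
    """For each target model, return (baseline score, adapted score) on the test set."""
    return {
        name: (
            evaluate_prompt(original, model, test_set, metric),
            evaluate_prompt(adapted[name], model, test_set, metric),
        )
        for name, model in models.items()
    }


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM: answers tersely only when the prompt asks for one word."""
    answer = prompt.rsplit(":", 1)[-1].strip().upper()
    return answer if "one word" in prompt else f"The answer is {answer}."


test_set = [("cat", "CAT"), ("dog", "DOG")]
results = compare(
    original="Uppercase this: {input}",
    adapted={"toy": "Uppercase this, answering in one word: {input}"},
    models={"toy": toy_model},
    test_set=test_set,
    metric=exact_match,
)
print(results)  # {'toy': (0.0, 1.0)}
```

Scoring both prompts with the same dataset and metric is what makes the pre- and post-optimization numbers directly comparable.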

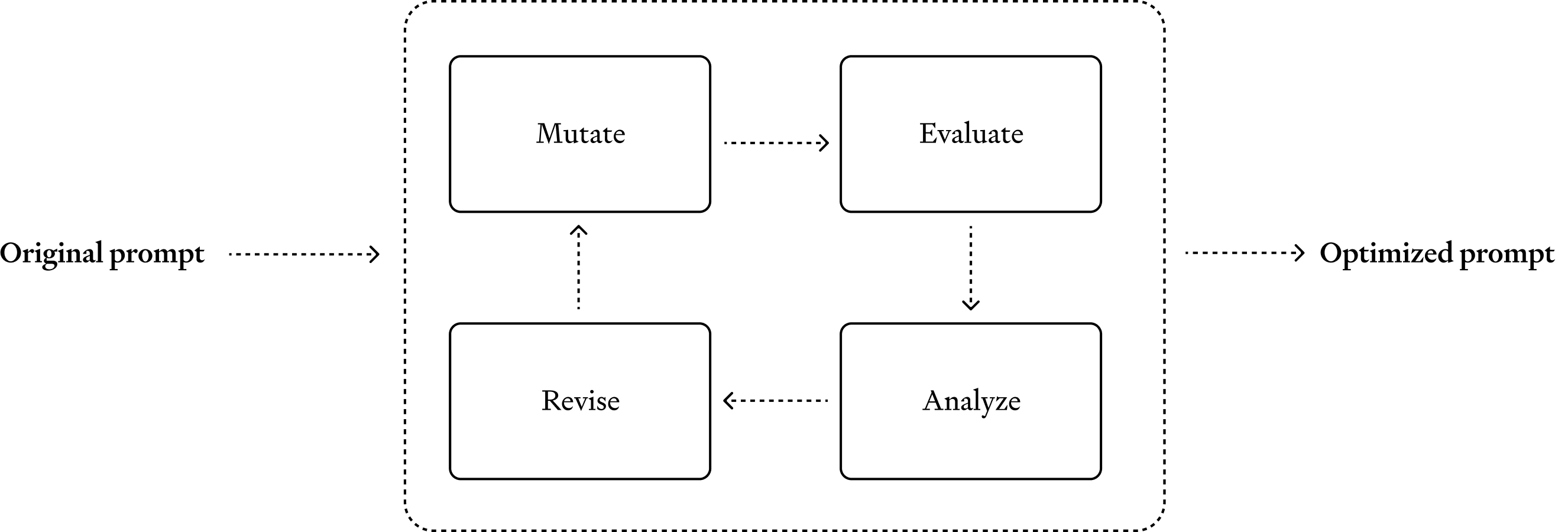

How the algorithm works

Our algorithm takes an original prompt and uses LLM-powered agents to improve it iteratively. In each iteration, the agents first mutate the prompt, then evaluate each mutation on a subset of the train set, analyze the results to determine where each prompt performed well and where it failed, and finally revise the best prompts using this analysis. This loop runs multiple times for each target model until an optimized prompt is produced, which is then evaluated on the test set.
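The mutate, evaluate, analyze, and revise loop can be sketched in Python. This is a minimal sketch under stated assumptions, not Not Diamond's algorithm. `mutate`, `analyze`, and `revise` are hypothetical rule-based stand-ins for the LLM-powered agents. `toy_model` stands in for a target model. The parameters `iterations`, `population`, `subset_size`, and `keep` are illustrative choices.

```python
import random
from typing import Callable, List, Tuple

Model = Callable[[str], str]
Dataset = List[Tuple[str, str]]  # (input, reference) pairs
Failure = Tuple[str, str, str]   # (input, reference, model output)

# Hypothetical agent stand-ins: a real system would call an LLM to rewrite
# prompts and diagnose failures; these use fixed rules instead.
HINTS = ["Be concise.", "Answer in one word.", "Think carefully."]


def mutate(template: str, rng: random.Random) -> str:
    """Mutation stub: prepend a randomly chosen instruction."""
    return f"{rng.choice(HINTS)} {template}"


def evaluate(template: str, model: Model, samples: Dataset) -> Tuple[float, List[Failure]]:
    """Score a prompt by exact match on samples and collect the failing cases."""
    failures = [(x, y, out) for x, y in samples
                if (out := model(template.format(input=x))).strip() != y]
    return 1 - len(failures) / len(samples), failures


def analyze(failures: List[Failure]) -> str:
    """Analysis stub: turn failure cases into feedback (flags verbose outputs)."""
    verbose = sum(len(out.split()) > 1 for _, _, out in failures)
    return "Answer in one word." if verbose else ""


def revise(template: str, feedback: str) -> str:
    """Revision stub: fold new feedback into the prompt."""
    return template if not feedback or feedback in template else f"{feedback} {template}"


def adapt(seed_prompt: str, model: Model, train: Dataset, iterations: int = 3,
          population: int = 4, subset_size: int = 2, keep: int = 2, seed: int = 0) -> str:
    rng = random.Random(seed)
    pool = [seed_prompt]
    for _ in range(iterations):
        # 1. Mutate: generate variations of the current best prompts.
        candidates = pool + [mutate(rng.choice(pool), rng) for _ in range(population)]
        # 2. Evaluate every candidate on a random subset of the train set.
        subset = rng.sample(train, min(subset_size, len(train)))
        scored = [(p, *evaluate(p, model, subset)) for p in candidates]
        scored.sort(key=lambda t: t[1], reverse=True)
        # 3-4. Analyze each top prompt's failures and revise it with that feedback.
        pool = [revise(p, analyze(fails)) for p, _, fails in scored[:keep]]
    # Pick the best prompt on the full train set; the test set stays held out.
    return max(pool, key=lambda p: evaluate(p, model, train)[0])


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM: answers tersely only when the prompt asks for one word."""
    answer = prompt.rsplit(":", 1)[-1].strip().upper()
    return answer if "one word" in prompt else f"The answer is {answer}."


train = [("cat", "CAT"), ("dog", "DOG"), ("owl", "OWL")]
best = adapt("Uppercase this: {input}", toy_model, train)
print(best)
```

Carrying the top `keep` prompts into each new iteration (a simple form of elitist selection) means a strong prompt is never lost to a weak mutation, and evaluating on a small random subset keeps each iteration cheap even with a three-sample train set.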

Not only does our algorithm achieve strong performance improvements across many use cases, models, and evaluation metrics, it is also highly data-efficient and can work with as few as three samples in the train set.

Different LLMs respond differently to the same prompt due to variations in training data, architecture, and instruction-following capabilities. Not Diamond's prompt adaptation automatically optimizes your prompts to work effectively across different models, eliminating the need for manual prompt engineering when improving accuracy or migrating between LLMs.
