Creating test data

Creating test data to evaluate RAG workflows can require significant effort. Not Diamond provides an easy way to automatically generate test queries for your RAG pipeline from your document store. All you need to do is provide a set of documents as either LangChain or LlamaIndex documents.

Installation

Python: Requires Python 3.9+. It’s recommended that you create and activate a virtualenv prior to installing the package. For this example, we'll be installing the optional additional rag dependencies.

pip install notdiamond[rag] datasets

Setting up

Create a .env file with the API keys of the models you want to use. This example will only use OpenAI models.

OPENAI_API_KEY = "YOUR_OPENAI_API_KEY"

We will also download some sample data to use for this example:

mkdir data

curl https://raw.githubusercontent.com/run-llama/llama_index/main/docs/docs/examples/data/paul_graham/paul_graham_essay.txt -o data/paul_graham_essay.txt

Creating test data from documents

In the example below, we use the document store downloaded above to demonstrate how Not Diamond's TestDataGenerator can generate test data for your RAG pipeline.

from notdiamond.toolkit.rag.llms import get_embedding, get_llm

from notdiamond.toolkit.rag.testset import TestDataGenerator

from llama_index.core import SimpleDirectoryReader

documents = SimpleDirectoryReader("data").load_data()

generator_llm = get_llm("openai/gpt-4o-mini")

generator_embedding = get_embedding("openai/text-embedding-3-small")

generator = TestDataGenerator(llm=generator_llm, embedding_model=generator_embedding)

test_data = generator.generate_from_docs(documents, testset_size=10)

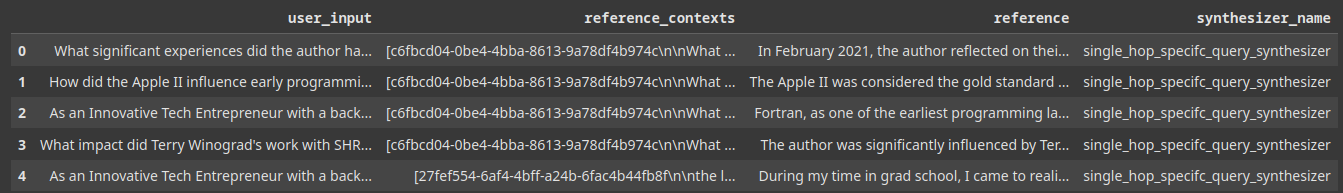

test_data.head()

In this example, we assume your documents are in a folder called data, and we use LlamaIndex's SimpleDirectoryReader to read all the documents in that folder.
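
The example relies on two things being in place: a populated data folder and an OpenAI API key. A minimal sketch of a check you could run first is shown here. The helper name `preflight` is hypothetical and not part of the notdiamond package, and it inspects the process environment only, so it assumes your .env values have already been loaded.

```python
import os
from pathlib import Path


def preflight(data_dir, required_env=("OPENAI_API_KEY",), env=None):
    """Return a list of problems; an empty list means the setup looks ready.

    Hypothetical helper for illustration, not part of notdiamond.
    """
    env = os.environ if env is None else env
    problems = []

    # The document loader needs an existing folder with at least one file.
    folder = Path(data_dir)
    if not folder.is_dir():
        problems.append(f"document folder not found: {folder}")
    elif not any(p.is_file() for p in folder.iterdir()):
        problems.append(f"document folder is empty: {folder}")

    # Each required key must be present and non-empty in the environment.
    for name in required_env:
        if not env.get(name):
            problems.append(f"environment variable not set: {name}")

    return problems


# Report anything that would make the generation step fail early.
for problem in preflight("data"):
    print("warning:", problem)
```

Returning a list instead of raising lets you see every missing piece in one pass before spending API calls on generation.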

TestDataGenerator supports both LangChain and LlamaIndex documents. You can use any LangChain document loader or LlamaIndex data loader to load your documents.

Under the hood, Not Diamond uses Ragas's knowledge graph technique: your documents are transformed into a graph of topics, which is then used to synthesize test queries with LLMs and topic embeddings. For more detail, check out their paper.
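
To make the topic-graph idea more concrete, here is a toy sketch. It is not how Ragas or Not Diamond implement it: plain keyword overlap stands in for LLM-extracted topics and embeddings, and every function name is hypothetical. It only illustrates the shape of the approach, where chunks that share a topic become linked and each link becomes a seed for a query that spans both chunks.

```python
import re
from itertools import combinations

# Tiny illustrative stopword list; words of three letters or fewer are dropped anyway.
STOPWORDS = {"with", "that", "this", "from", "were", "have"}


def extract_topics(text):
    """Crude stand-in for topic extraction: longer lowercase words minus stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if len(w) > 3 and w not in STOPWORDS}


def build_topic_graph(chunks):
    """Link every pair of chunks that shares at least one topic.

    Returns {(i, j): shared_topics} with i < j indexing into `chunks`.
    """
    topics = [extract_topics(c) for c in chunks]
    edges = {}
    for i, j in combinations(range(len(chunks)), 2):
        shared = topics[i] & topics[j]
        if shared:
            edges[(i, j)] = shared
    return edges


def query_seeds(chunks, edges):
    """Turn each edge into a prompt seed that an LLM could expand into a test query."""
    return [
        f"Ask a question about {', '.join(sorted(shared))} that needs both: "
        f"{chunks[i]!r} and {chunks[j]!r}"
        for (i, j), shared in sorted(edges.items())
    ]


chunks = [
    "I started writing short stories before college.",
    "In college I studied programming and philosophy.",
    "Painting became my focus after graduate school.",
]
edges = build_topic_graph(chunks)
for seed in query_seeds(chunks, edges):
    print(seed)
```

In this toy input only the first two chunks share a topic, so a single seed is produced. A real pipeline would use far richer topic extraction and would hand each seed to an LLM to write the final query.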

Now that we have a set of test queries in place, we can move on to automatically optimizing our retrieval parameters.
